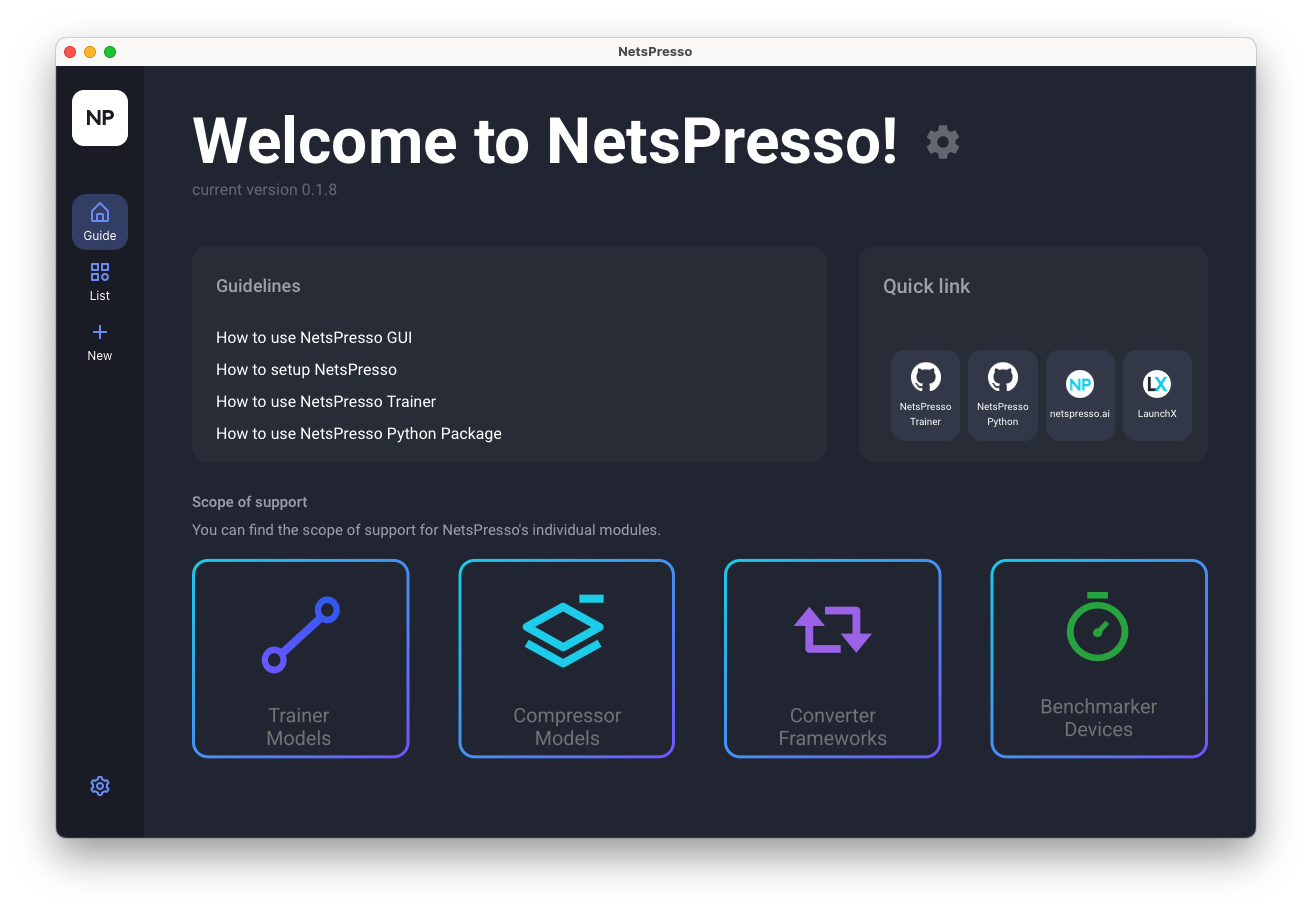

NetsPresso GUI

NetsPresso is a hardware-aware AI model optimization tool. The GUI client offers a more intuitive way to use NetsPresso to train models and optimize them for specific devices. Depending on the optimization pipeline, users can perform tasks such as pruning, framework conversion, or quantization.

Download link

You can download the NetsPresso GUI from the following link: Download the NetsPresso GUI.

Please note that while the NetsPresso GUI can be installed on various systems, training must be run on a Linux GPU server.

Main Functions

The NetsPresso GUI provides enhanced usability. The key features available are as follows.

- Code Generation: This feature eases the move into a coding environment. After setting the configuration for tasks such as training, compression, conversion, and benchmarking, users can quickly generate code that runs with the provided Python package.

- Project Management: Track the status and history of your experiments. The status and experiment lists are shown on the main page.

- Visualization: Experiment results are displayed visually in one place, giving a clear overview of your progress and outcomes.

- Download: Save your optimized models directly to your local computer.

User Scenario

Depending on the optimization method you choose, there are two main ways to use NetsPresso.

Scenario 1. Compression Pipeline

To make a model lightweight through structured pruning or filter decomposition, use the NetsPresso Compression Pipeline.

- Compress the models to save computational cost through structured pruning or filter decomposition.

- Retrain the model to recover the accuracy after compression and evaluate its performance.

- Use Benchmark to measure the FPS (Frames Per Second) of the model on the device.

- Easily compare the results of compression through Visualization.
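To make the structured pruning step listed above more concrete, here is a minimal sketch of one common criterion: ranking filters by L1 norm and removing the weakest ones. It is a generic illustration and not NetsPresso's actual implementation. The toy list-of-lists layer and the names `l1_norm` and `prune_filters` are assumptions made for this example.

```python
from typing import List

# Hypothetical toy representation: one flattened convolution filter.
Filter = List[float]


def l1_norm(weights: Filter) -> float:
    """Sum of absolute weights, used here as a simple importance score."""
    return sum(abs(w) for w in weights)


def prune_filters(filters: List[Filter], ratio: float) -> List[Filter]:
    """Drop the `ratio` fraction of filters with the smallest L1 norm.

    Whole filters are removed, so the layer becomes structurally smaller.
    The surviving filters keep their original order.
    """
    if not 0.0 <= ratio < 1.0:
        raise ValueError("ratio must be in [0, 1)")
    n_remove = int(len(filters) * ratio)
    # Filter indices sorted from least to most important.
    ranked = sorted(range(len(filters)), key=lambda i: l1_norm(filters[i]))
    removed = set(ranked[:n_remove])
    return [f for i, f in enumerate(filters) if i not in removed]


# Toy layer with four filters; two of them carry almost no weight.
layer = [
    [0.9, -0.8, 0.7],
    [0.01, 0.02, -0.01],
    [0.5, 0.4, -0.6],
    [0.03, -0.02, 0.0],
]
pruned = prune_filters(layer, ratio=0.5)
print(len(layer), "->", len(pruned), "filters")
```

Removing whole filters changes the layer's weights abruptly, which is why the pipeline retrains the model after compression to recover accuracy.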

Scenario 2. Conversion / Quantization Pipeline

Choose the optimization method that suits your device. We support both quantization and framework conversion.

- Convert the model to a framework that is compatible with your device.

- Quantization is also available as an option.

- Use Benchmark to measure the FPS (Frames Per Second) of the model on the device.
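As a rough illustration of the FPS figure mentioned above, the sketch below converts an average per-inference latency into frames per second. It assumes single-image inference and times a placeholder workload on the local machine, whereas Benchmark measures the model on the target device. The names `latency_to_fps` and `measure_latency_ms` are hypothetical helpers, not part of any NetsPresso package.

```python
import time
from statistics import mean
from typing import Callable, List


def latency_to_fps(latency_ms: float) -> float:
    """Convert per-frame latency in milliseconds to frames per second."""
    if latency_ms <= 0:
        raise ValueError("latency_ms must be positive")
    return 1000.0 / latency_ms


def measure_latency_ms(run_once: Callable[[], object],
                       warmup: int = 3, runs: int = 10) -> float:
    """Average wall-clock time of `run_once` in milliseconds."""
    # Warm-up runs are executed but not timed.
    for _ in range(warmup):
        run_once()
    samples: List[float] = []
    for _ in range(runs):
        start = time.perf_counter()
        run_once()
        samples.append((time.perf_counter() - start) * 1000.0)
    return mean(samples)


# Placeholder workload standing in for one model inference.
avg_ms = measure_latency_ms(lambda: sum(range(1000)))
print(f"average latency: {avg_ms:.4f} ms")

# A model that needs 20 ms per frame runs at 50 FPS.
print(latency_to_fps(20.0))
```

Halving the latency doubles the FPS, so comparing FPS before and after optimization shows the speed-up gained on a given device.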
