Step 4: Convert the model framework

NetsPresso Converter converts a model from one framework to another to suit the target device. You can run it through the converter module of the NetsPresso Python package, or in Benchmark Studio after uploading a model. In Training Studio, trained or compressed models can be converted directly, without uploading them first.

Range of Support

This range of support applies to the Python package. In Training Studio, trained models are converted to ONNX format.

| Target / Source Framework | ONNX | TensorRT | TensorFlow |
|---|---|---|---|
| TensorRT | ✅ | | |
| DRP-AI | ✅ | | |
| OpenVINO | ✅ | | |
| TensorFlow Lite | ✅ | ✅ | ✅ |
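To check a source/target pair before starting a conversion, the table can be encoded as a simple lookup. This is a minimal sketch, assuming rows are target frameworks and columns are source frameworks; `is_supported` and `targets_for` are hypothetical helpers, not part of the NetsPresso package.

```python
# Support matrix transcribed from the Range of Support table.
# Keys are target frameworks; values are the source frameworks listed
# as convertible into them (Python package only).
SUPPORT_MATRIX: dict[str, frozenset[str]] = {
    "TensorRT": frozenset({"ONNX"}),
    "DRP-AI": frozenset({"ONNX"}),
    "OpenVINO": frozenset({"ONNX"}),
    "TensorFlow Lite": frozenset({"ONNX", "TensorRT", "TensorFlow"}),
}


def is_supported(source: str, target: str) -> bool:
    """Return True if the table lists a conversion from `source` to `target`."""
    return source in SUPPORT_MATRIX.get(target, frozenset())


def targets_for(source: str) -> list[str]:
    """Return every target framework reachable from `source`, sorted by name."""
    return sorted(t for t, sources in SUPPORT_MATRIX.items() if source in sources)


if __name__ == "__main__":
    print(is_supported("ONNX", "OpenVINO"))
    print(targets_for("TensorFlow"))
```

Keeping the matrix as data rather than nested `if` statements means the lookup only needs editing in one place if the supported range changes.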

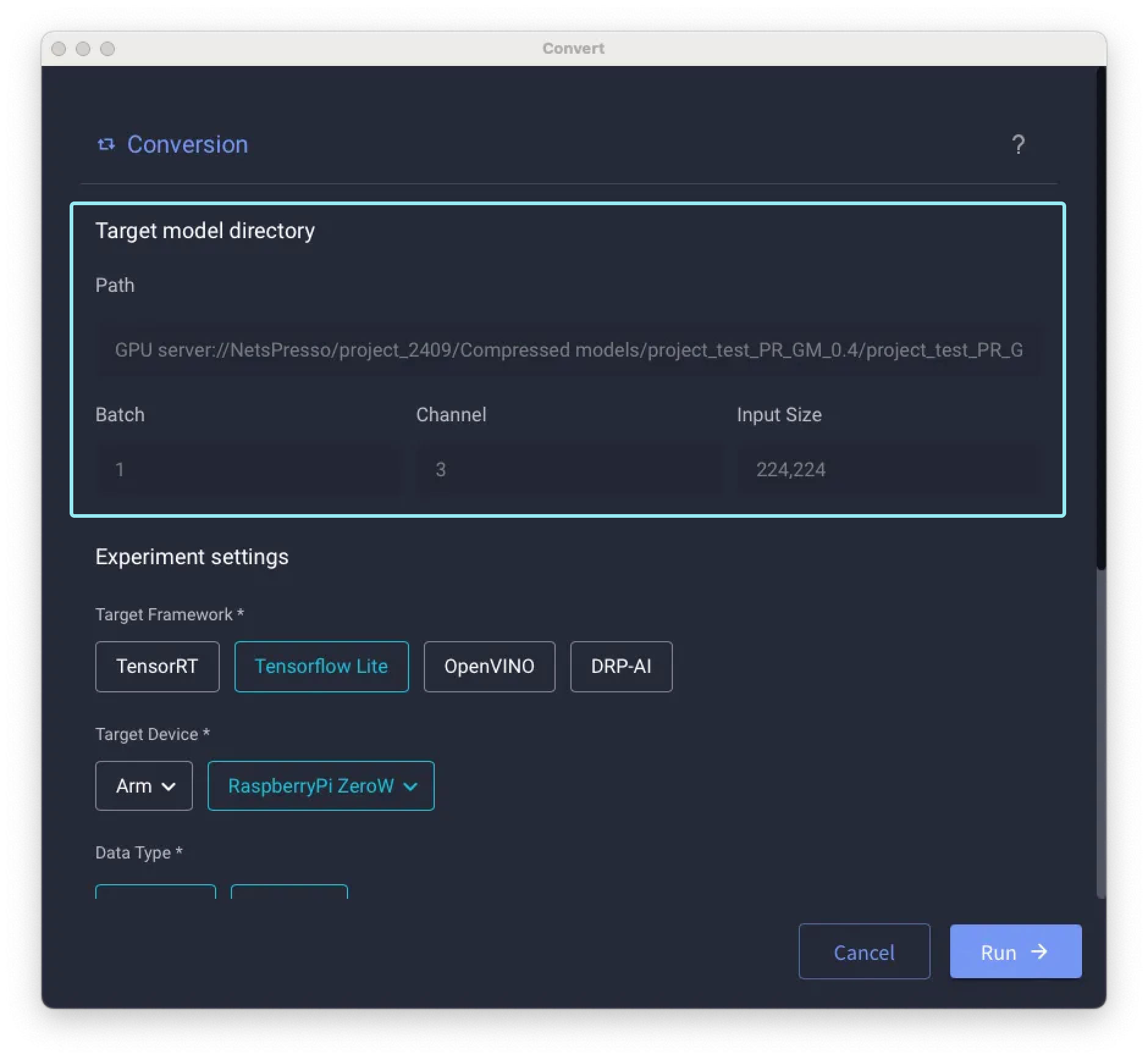

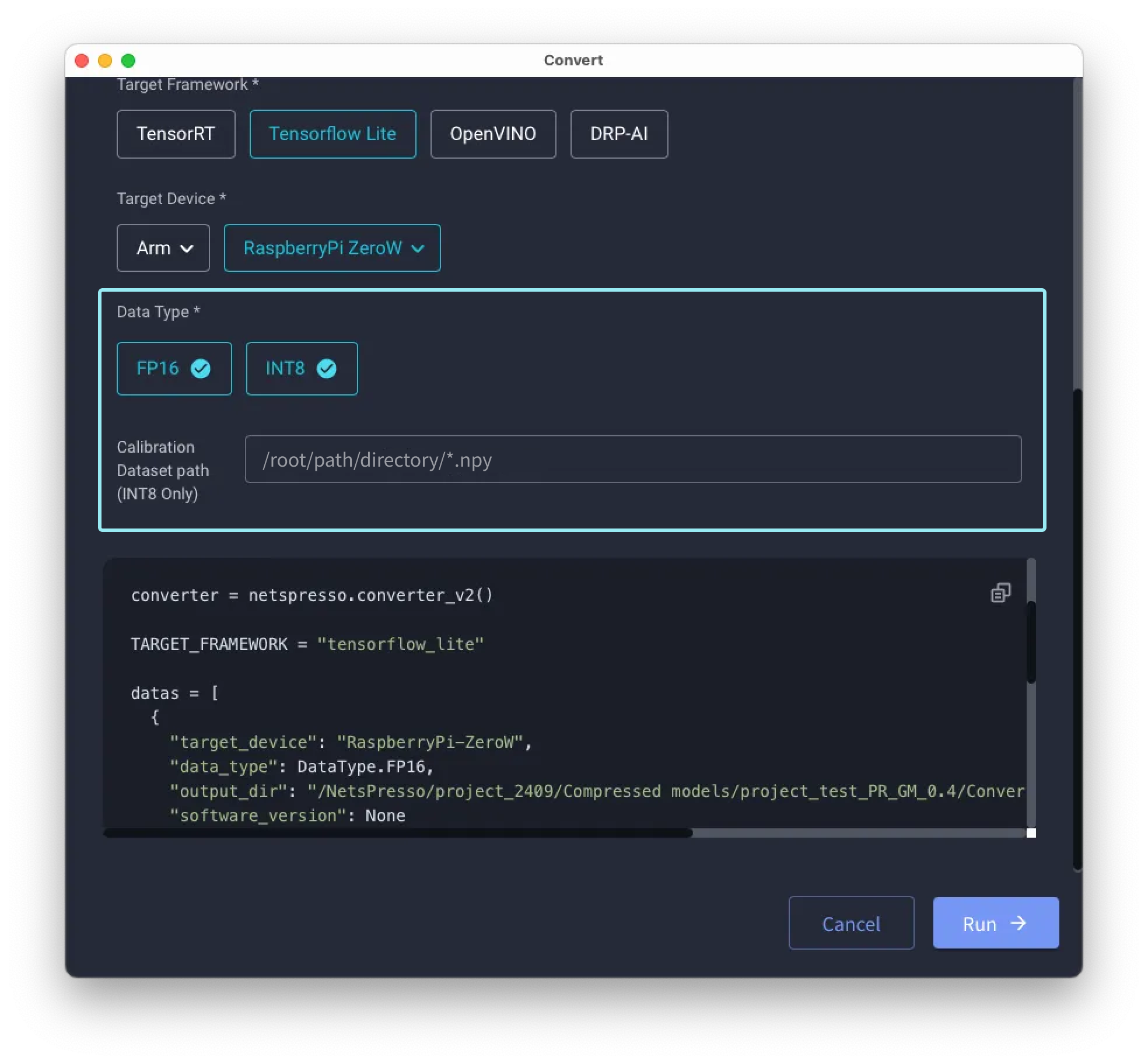

Convert Configuration

Check the path and target model shown in the Target Model Directory, then configure the experiment settings as follows:

- Target Framework

- Target Device

- Data Type: Depending on the model, FP32 or INT8 is supported. A calibration dataset is optional; if you provide one, it must be in NumPy format, and its full path must be given as a string.
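The calibration-dataset requirements above can be sketched as follows. This is a hypothetical helper, not the NetsPresso API: it saves samples with `np.save` to a single `.npy` file (an assumption; the text only says "NumPy format"), returns the absolute path as a string, and checks the Data Type value. The settings keys, the device name `example-device`, and the input shape `(8, 3, 224, 224)` are illustrative assumptions; real calibration data should be representative, preprocessed inputs for your model.

```python
import os
import tempfile
from typing import Optional

import numpy as np

# Data types named in the Data Type setting; which one applies depends on the model.
ALLOWED_DATA_TYPES = {"FP32", "INT8"}


def save_calibration_dataset(samples: np.ndarray, directory: str,
                             filename: str = "calibration.npy") -> str:
    """Save samples in NumPy (.npy) format and return the full path as a string."""
    if not filename.endswith(".npy"):
        filename += ".npy"  # np.save would append it anyway; keep the returned path accurate
    path = os.path.abspath(os.path.join(directory, filename))
    np.save(path, samples)
    return path


def build_convert_settings(target_framework: str, target_device: str, data_type: str,
                           calibration_path: Optional[str] = None) -> dict:
    """Collect Convert Configuration values into a dict (hypothetical keys)."""
    if data_type not in ALLOWED_DATA_TYPES:
        raise ValueError(f"data_type must be one of {sorted(ALLOWED_DATA_TYPES)}, got {data_type!r}")
    if calibration_path is not None:
        # Optional, but if given it must be a full path string to an existing .npy file.
        if not isinstance(calibration_path, str) or not os.path.isabs(calibration_path):
            raise ValueError("calibration_path must be a full path given as a string")
        if not calibration_path.endswith(".npy") or not os.path.isfile(calibration_path):
            raise ValueError(f"calibration file missing or not .npy: {calibration_path}")
    return {
        "target_framework": target_framework,
        "target_device": target_device,
        "data_type": data_type,
        "calibration_dataset_path": calibration_path,
    }


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Hypothetical input shape (batch, channels, height, width).
    samples = rng.random((8, 3, 224, 224), dtype=np.float32)
    calib_path = save_calibration_dataset(samples, tempfile.mkdtemp())
    settings = build_convert_settings("TensorRT", "example-device", "INT8", calib_path)
    print(settings["calibration_dataset_path"])
```

Returning `os.path.abspath(...)` yields a full path string regardless of the current working directory, which is the form the Data Type setting asks for.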
