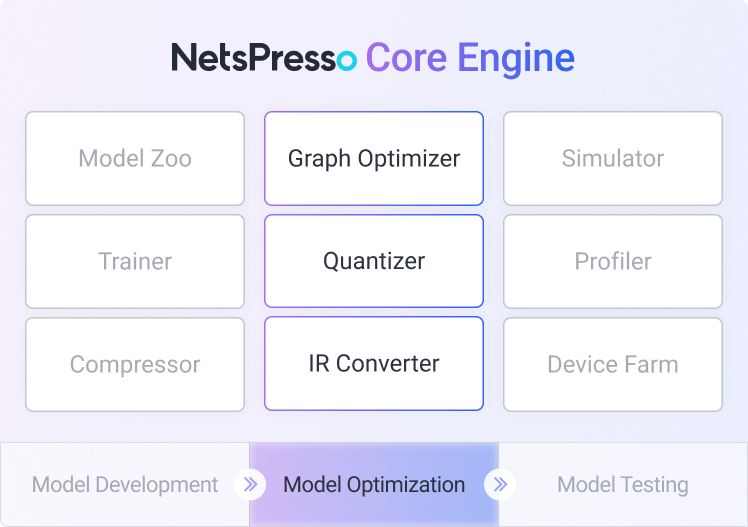

Stage 2: Model Optimization

The goal of this stage is to convert models into hardware-friendly formats and improve runtime performance.

Graph Optimizer

- Simplifies and restructures computational graphs for faster and more efficient inference.

- This includes layer fusion and operator replacement.

NetsPresso advantages:

- Automatic graph optimization after compression and quantization

- Improved inference latency and deployment stability

Quantizer

- Converts model weights and activations into lower-precision representations such as INT8.

- It supports calibration with sample datasets to maintain accuracy after quantization.

- INT8 quantization with Optimization Studio

NetsPresso advantages:

- Cutting-edge post-training quantization with minimal accuracy loss

- Built-in calibration workflow for robust performance on various datasets

- One-click INT8 export for TensorRT, TFLite, and more

IR Converter

- Converts models into intermediate representations suitable for cross-framework transformation.

- Features & Scope of support

NetsPresso advantage:

- Multi-platform support (NVIDIA, Qualcomm, Renesas, Intel, and more)

- Automated model structure adjustment for maximum device compatibility

- No need for manual conversion or re-writing

Updated 9 months ago

What’s Next