Method: Structured Pruning

Model Compression

The goal of model compression is to obtain a simpler model from the original one without degrading its performance. Compressing a large model reduces its storage and computational cost and makes it usable in real-time applications.

NetsPresso supports the following compression methods.

- Structured Pruning

- Filter Decomposition

This page describes Structured Pruning.

What is "Pruning"?

Pruning is the process of removing individual parameters or groups of parameters from a complex model to make it faster and more compact. Depending on what is removed, pruning is divided into unstructured pruning and structured pruning.

- Unstructured Pruning: Removes individual parameters and returns a sparse model, which requires specialized hardware or software to be accelerated.

- Structured Pruning: Removes entire neurons, filters, or channels and returns a smaller dense model, which does not require any particular hardware or software to be accelerated.
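To make the difference between the two kinds of pruning concrete, here is a minimal pure-Python sketch on a toy layer. The layer values and the helper names `unstructured_prune` and `structured_prune` are hypothetical and only for illustration; they are not part of Model Compressor. Both helpers rank by magnitude, but unstructured pruning zeroes individual weights while keeping the shape, whereas structured pruning drops whole filters.

```python
import math

# Toy layer: 4 filters, each flattened to 3 weights (illustrative values only).
layer = [
    [0.9, -0.1, 0.4],
    [0.05, 0.02, -0.03],
    [-0.7, 0.8, 0.1],
    [0.01, -0.2, 0.0],
]


def unstructured_prune(weights, ratio):
    """Zero out the `ratio` fraction of individual weights with the smallest magnitude.

    The layer keeps its shape, so the result is a sparse layer.
    """
    ranked = sorted(
        (abs(w), i, j) for i, f in enumerate(weights) for j, w in enumerate(f)
    )
    k = int(len(ranked) * ratio)
    pruned = [list(f) for f in weights]
    for _, i, j in ranked[:k]:
        pruned[i][j] = 0.0
    return pruned


def structured_prune(weights, ratio):
    """Remove the `ratio` fraction of whole filters with the smallest L2 norm.

    The surviving filters are kept intact, so the result is a smaller dense layer.
    """
    norms = [math.sqrt(sum(w * w for w in f)) for f in weights]
    k = int(len(weights) * ratio)
    drop = set(sorted(range(len(weights)), key=lambda i: norms[i])[:k])
    return [list(f) for i, f in enumerate(weights) if i not in drop]


sparse = unstructured_prune(layer, 0.5)
smaller = structured_prune(layer, 0.5)
print(len(sparse), len(smaller))  # 4 filters (half the weights zeroed) vs. 2 filters
```

The sparse result still stores every zero and only runs faster on hardware or software that exploits sparsity, while the structured result is simply a smaller layer that any runtime executes faster.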

The goal of pruning is to reduce the required computational resources and accelerate the model by removing unnecessary filters. (Model Compressor currently supports only structured pruning; unstructured pruning will be released in the near future.)

However, fine-tuning is necessary to compensate for the accuracy lost during pruning.

Structured Pruning

Supported functions

Pruning in Model Compressor provides two pruning functions (Pruning by Channel Index and Pruning by Criteria) and one recommendation method (SLAMP) to meet users' model compression needs.

- Pruning by Channel Index

Removes the filters that the user specifies. If the selected filters are redundant or less important, the pruned model loses less accuracy.

- Pruning by Criteria

- L2 Norm: The L2 norm of each filter's weights represents the importance of that filter. In other words, this method prunes filters based on the magnitude of their weights.

- Nuclear Norm: The nuclear norm is the sum of the singular values of a matrix and represents its energy. This method computes the nuclear norm of the feature map produced by each filter to determine the filter's relevance, so a portion of the dataset is required. For more detail, please refer to the following paper.

- Geometric Median: The geometric median is used to measure the redundancy of each filter so that redundant filters can be removed. For more detail, please refer to the following paper.

- Normalization

- Because the distribution and magnitude of the criterion values vary from layer to layer, they must be brought to a common scale before they can be compared. For this reason, all criterion values are normalized per layer as follows.

- "Recommendation" in Model Compressor

The "Recommendation" enables so-called global pruning, which automatically allocates a pruning ratio to each layer. The current version supports only SLAMP.

- SLAMP (Structured Layer-adaptive Sparsity for the Magnitude-based Pruning)

- SLAMP is inspired by "Layer-adaptive Sparsity for the Magnitude-based Pruning" (LAMP), presented at ICLR 2021.

- LAMP is an unstructured pruning method; SLAMP modifies it to measure layer-wise importance for structured pruning.

- Normalization function

- The following normalization function is applied to the criterion values above.

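"Pruning by Channel Index" removes the filters the user lists. The sketch below shows what that removal implies for the surrounding layers, using nested Python lists as stand-ins for convolution weights. The function name `prune_by_channel_index` and the list layout are hypothetical assumptions for illustration, not Model Compressor's API. The key point is that dropping output filters of one layer also removes the matching input channels of the layer that consumes its output.

```python
def prune_by_channel_index(conv_w, conv_b, next_w, indices):
    """Remove the filters listed in `indices` from a conv layer, plus the
    matching input channels of the layer that consumes its output.

    conv_w: [out_channels][in_channels][kernel]  weights of the pruned layer
    conv_b: [out_channels]                        its bias
    next_w: [next_out][out_channels][kernel]     weights of the next layer
    """
    n_out = len(conv_w)
    drop = set(indices)
    invalid = sorted(i for i in drop if not 0 <= i < n_out)
    if invalid:
        raise IndexError(f"filter indices out of range: {invalid}")
    if len(drop) == n_out:
        raise ValueError("cannot remove every filter of a layer")
    keep = [i for i in range(n_out) if i not in drop]
    new_conv_w = [conv_w[i] for i in keep]
    new_conv_b = [conv_b[i] for i in keep]
    # The next layer must stop reading the removed channels.
    new_next_w = [[filt[i] for i in keep] for filt in next_w]
    # In a real network, per-channel parameters in between (e.g. BatchNorm
    # scale, shift and running statistics) must be sliced with `keep` as well.
    return new_conv_w, new_conv_b, new_next_w


# Toy network: conv with 4 filters over 2 input channels (1x1 kernels),
# followed by a conv with 3 filters over those 4 channels.
conv_w = [[[float(10 * o + c)] for c in range(2)] for o in range(4)]
conv_b = [0.1, 0.2, 0.3, 0.4]
next_w = [[[float(100 * o + c)] for c in range(4)] for o in range(3)]

w, b, nw = prune_by_channel_index(conv_w, conv_b, next_w, [1, 3])
print(len(w), b, [len(f) for f in nw])  # 2 [0.1, 0.3] [2, 2, 2]
```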
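The criteria listed under "Pruning by Criteria" can be sketched as scoring functions over a layer's filters. Below, `l2_scores` follows the L2 Norm description directly. `gm_distance_scores` is an assumption: it uses the approximation popularized by filter pruning via geometric median (FPGM), where the filters with the smallest total distance to all other filters lie closest to the geometric median and are treated as the most redundant. Model Compressor's exact computation may differ. `normalize_per_layer` is a hypothetical placeholder (divide by the layer's maximum), not the normalization formula used by Model Compressor. The Nuclear Norm criterion is omitted because it needs feature maps from data and an SVD.

```python
import math


def l2_scores(filters):
    """Importance of each filter = L2 norm of its flattened weights."""
    return [math.sqrt(sum(w * w for w in f)) for f in filters]


def gm_distance_scores(filters):
    """Redundancy proxy (assumed, FPGM-style): total Euclidean distance from each
    filter to all filters in the layer. A small total means the filter is close
    to the layer's geometric median, i.e. it is easy to replace by the others."""
    def dist(a, b):
        return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))
    return [sum(dist(f, g) for g in filters) for f in filters]


def normalize_per_layer(scores):
    """Hypothetical per-layer normalization: divide by the layer's largest score."""
    top = max(scores)
    return [s / top if top > 0 else 0.0 for s in scores]


def filters_to_prune(scores, ratio):
    """Indices of the int(ratio * n) lowest-scoring filters, in ascending order."""
    k = int(len(scores) * ratio)
    return sorted(sorted(range(len(scores)), key=lambda i: scores[i])[:k])


# Filter 1 nearly duplicates filter 0; filter 3 is small but distinct.
layer = [[2.0, 0.0], [1.9, 0.1], [0.0, 1.0], [-0.5, 0.0]]
print(filters_to_prune(l2_scores(layer), 0.25))           # [3]: smallest magnitude
print(filters_to_prune(gm_distance_scores(layer), 0.25))  # [1]: most redundant
```

The two criteria pick different filters on the same layer: the magnitude criterion removes the small filter, while the geometric-median criterion removes the near-duplicate. Normalization does not change the ranking inside a layer; it only matters when scores from different layers are compared.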
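The global pruning performed by "Recommendation" can be illustrated with a LAMP-style score computed on whole filters. This is an assumption about how a structured variant might look, not NetsPresso's SLAMP definition: each filter's squared L2 norm plays the role of a weight magnitude, and the LAMP score divides it by the sum of all squared norms at or above it in the same layer. Scores from all layers are then pooled and the globally lowest ones are removed, so the per-layer ratios fall out automatically. The names `lamp_scores` and `global_prune_plan` are hypothetical.

```python
def lamp_scores(filters):
    """LAMP-style score per filter, using squared L2 norms as magnitudes:
    score_i = s_i / (sum of s_j over filters ranked at or above i).
    The largest filter in a layer always scores 1.0."""
    sq = [sum(w * w for w in f) for f in filters]
    order = sorted(range(len(sq)), key=lambda i: sq[i])
    scores = [0.0] * len(sq)
    tail = 0.0
    for i in reversed(order):  # walk from the largest magnitude down
        tail += sq[i]
        scores[i] = sq[i] / tail if tail > 0 else 0.0
    return scores


def global_prune_plan(layers, ratio):
    """Pool scores from all layers and mark the globally lowest int(ratio * total)
    filters for removal. Each layer's top-scoring filter is never removed.
    Returns {layer_name: sorted filter indices to prune}."""
    candidates = []
    for name, filters in layers.items():
        scores = lamp_scores(filters)
        top = max(range(len(scores)), key=lambda i: scores[i])
        candidates += [(s, name, i) for i, s in enumerate(scores) if i != top]
    total = sum(len(f) for f in layers.values())
    k = min(int(total * ratio), len(candidates))
    plan = {name: [] for name in layers}
    for _, name, i in sorted(candidates)[:k]:
        plan[name].append(i)
    return {name: sorted(idx) for name, idx in plan.items()}


layers = {
    "conv1": [[1.0], [0.1], [0.2], [0.15]],
    "conv2": [[3.0], [2.9], [3.1], [0.05]],
}
print(global_prune_plan(layers, 0.5))  # {'conv1': [1, 2, 3], 'conv2': [3]}
```

With one global ratio of 0.5, this sketch removes 75% of `conv1` but only 25% of `conv2`, which is the kind of per-layer allocation global pruning is meant to automate.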

What you can do with Model Compressor

- Choose either "Pruning by Channel Index" or "Pruning by Criteria" depending on your purpose.

- "Pruning by Channel Index" is recommended for experts who already know which filters are unnecessary.

- "Pruning by Criteria" is recommended for users who want to prune specific layers by a given ratio, or for users new to model compression.

- To use "Pruning by Channel Index"

- Check "Pruning by Channel Index".

- Check the layers to be pruned.

- Enter the indices of the filters to be pruned (e.g., 105, 8, 9, 11-13).

- To use "Pruning by Criteria":

- Check one of the criteria (e.g., L2 Norm) to calculate the importance score of each filter.

- To prune specific layers by a given ratio:

- Check the layers to be pruned.

- Enter the pruning ratio (e.g., 0.2).

- To compress all layers automatically for a given ratio:

- Press "Recommendation" and enter the pruning ratio (e.g., 0.8) to compress the model.
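The two inputs described in the steps above, a filter index list such as "105, 8, 9, 11-13" and a pruning ratio such as 0.2, can be interpreted as in the following sketch. The helper names are hypothetical, and two behaviors are assumptions rather than documented Model Compressor rules: ranges like "11-13" are treated as inclusive on both ends, and the number of removed filters is rounded down.

```python
def parse_filter_indices(text):
    """Parse a string such as "105, 8, 9, 11-13" into sorted, unique indices.
    Ranges are assumed to be inclusive on both ends."""
    indices = set()
    for part in text.split(","):
        part = part.strip()
        if not part:
            continue
        if "-" in part:
            start, end = (int(p) for p in part.split("-", 1))
            if start > end:
                raise ValueError(f"invalid range: {part!r}")
            indices.update(range(start, end + 1))
        else:
            indices.add(int(part))
    return sorted(indices)


def num_filters_to_prune(num_filters, ratio):
    """Number of filters removed from a layer for a pruning ratio
    (rounding down is an assumption)."""
    if not 0.0 <= ratio < 1.0:
        raise ValueError("ratio must be in [0, 1)")
    return int(num_filters * ratio)


print(parse_filter_indices("105, 8, 9, 11-13"))  # [8, 9, 11, 12, 13, 105]
print(num_filters_to_prune(64, 0.2))             # 12
```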

Verification of the Structured Pruning

Pruning in Model Compressor removes specific filters while preserving previously learned knowledge. To verify the pruning methods in Model Compressor, we measured the classification accuracy of the returned compressed models and of their fine-tuned versions.

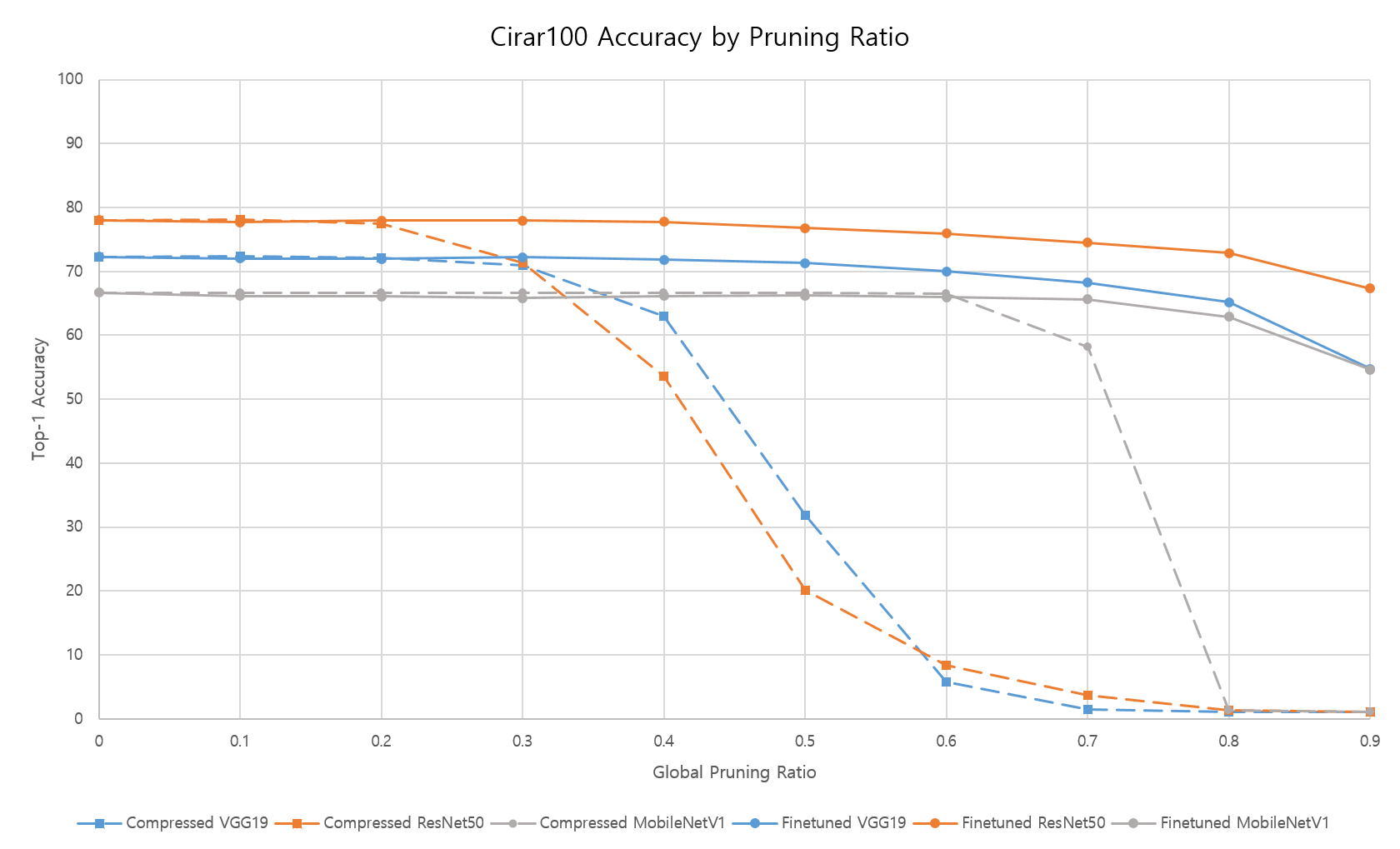

- CIFAR-100 accuracy of the compressed models and the fine-tuned models

- The models were compressed with the following strategies: L2 Norm, SLAMP, and intersection. (Original models are from here.)

- In the image above, the dashed lines indicate the compressed models returned by Model Compressor and the solid lines indicate the models after fine-tuning, for each pruning ratio.

Not Supported Layers

- Group convolutional layers are currently not supported; support will be added in the near future.
